With just weeks to go before the election, a new study has revealed major flaws in Facebook’s ability to automatically detect and flag misinformation, allowing pages to keep spreading content even after the company’s own fact-checking partners have labeled it misinformation.

The study, by the digital rights group Avaaz and published on Friday morning, found hundreds of pieces of misinformation that had been tweaked slightly to prevent Facebook’s artificial intelligence system from placing a warning label on them.

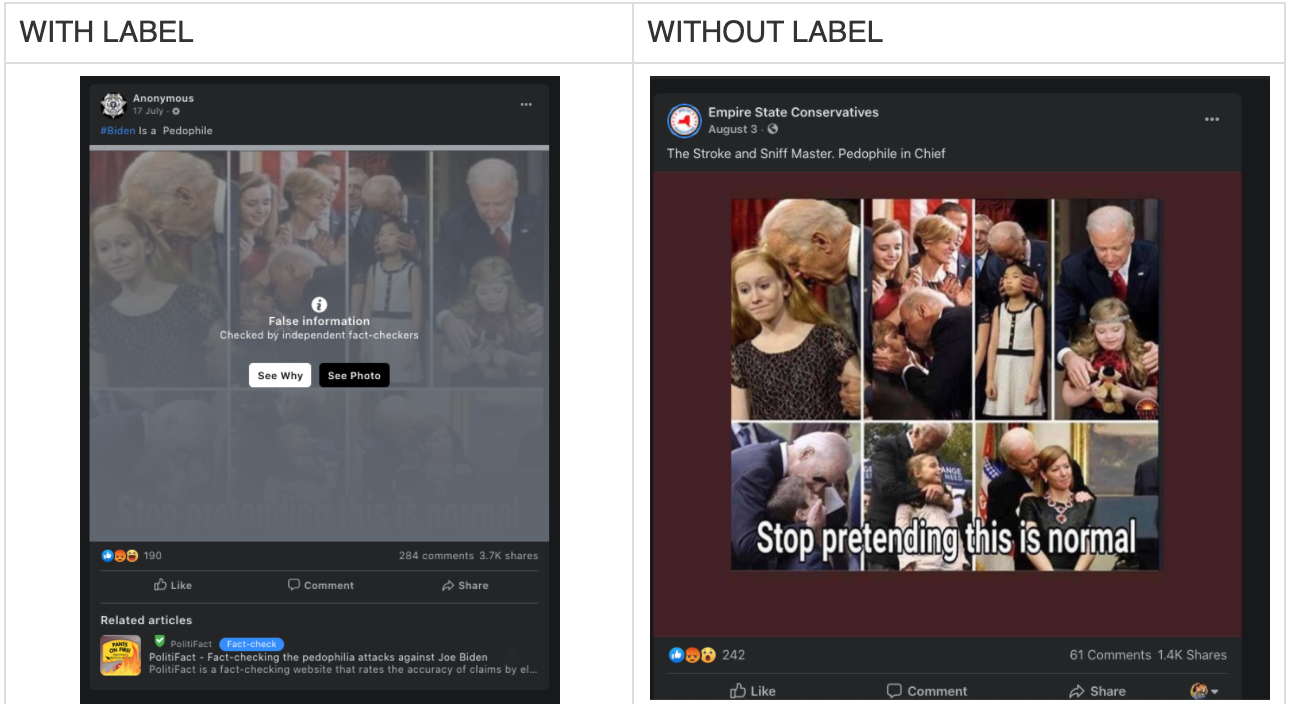

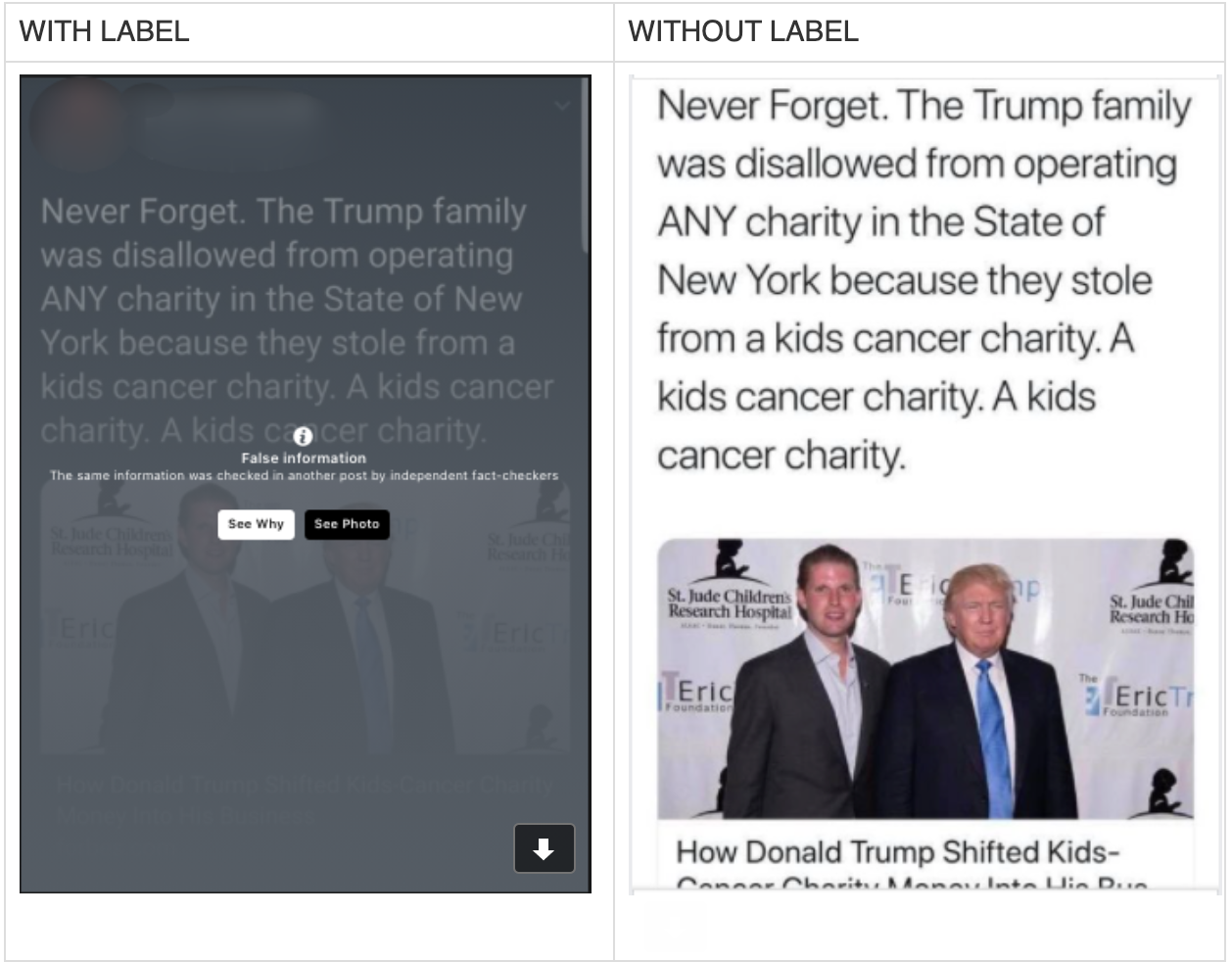

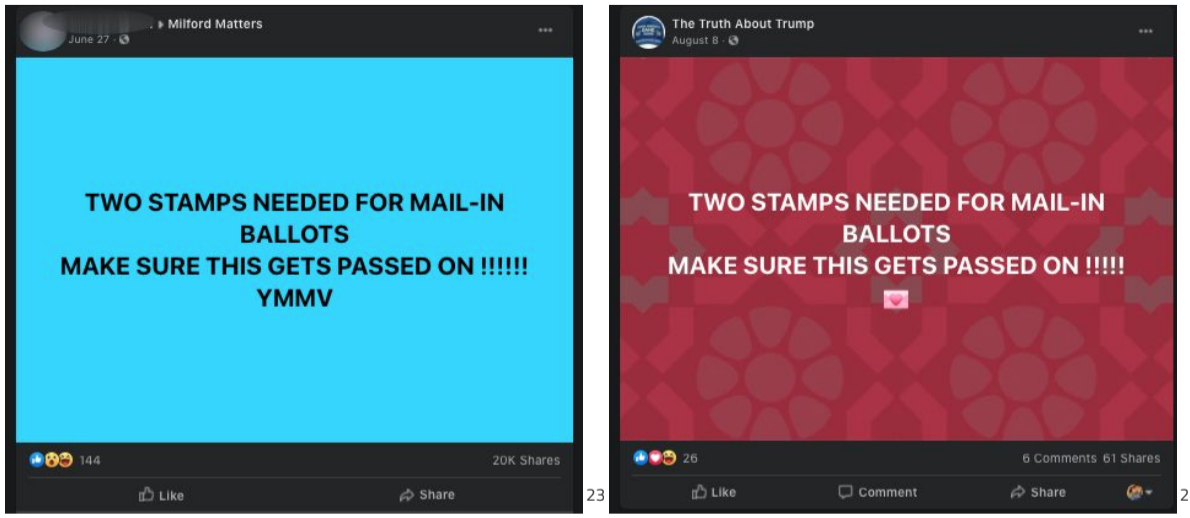

Simple changes, such as altering the background color, cropping an image, or changing the color of the text on a meme, are enough to fool the system, Avaaz found.

Researchers found that 42% of the fact-checked misinformation they analyzed was circumventing Facebook’s own policies and remaining on the platform without a label, earning millions of interactions.

In total, Avaaz found 738 posts that should have a fact-checking label and be down-ranked by Facebook’s recommendation algorithm. Together, they have racked up 5.6 million interactions and an estimated 142 million views.

Coronavirus misinformation continues to be a problem on Facebook, but as the presidential election gets closer, much of the problematic content Avaaz identified is attempting to sway voters towards a particular candidate and threatens to undermine the credibility of the election.

That content targets both candidates, including misinformation asserting that Democratic presidential candidate Joe Biden is a pedophile and that President Donald Trump and his family stole money from a children’s charity.

But possibly even more concerning for November’s vote is misinformation that seeks to undermine the result of the election by raising questions about mail-in voting. The president himself has played a large part in spreading misinformation about voting by mail.

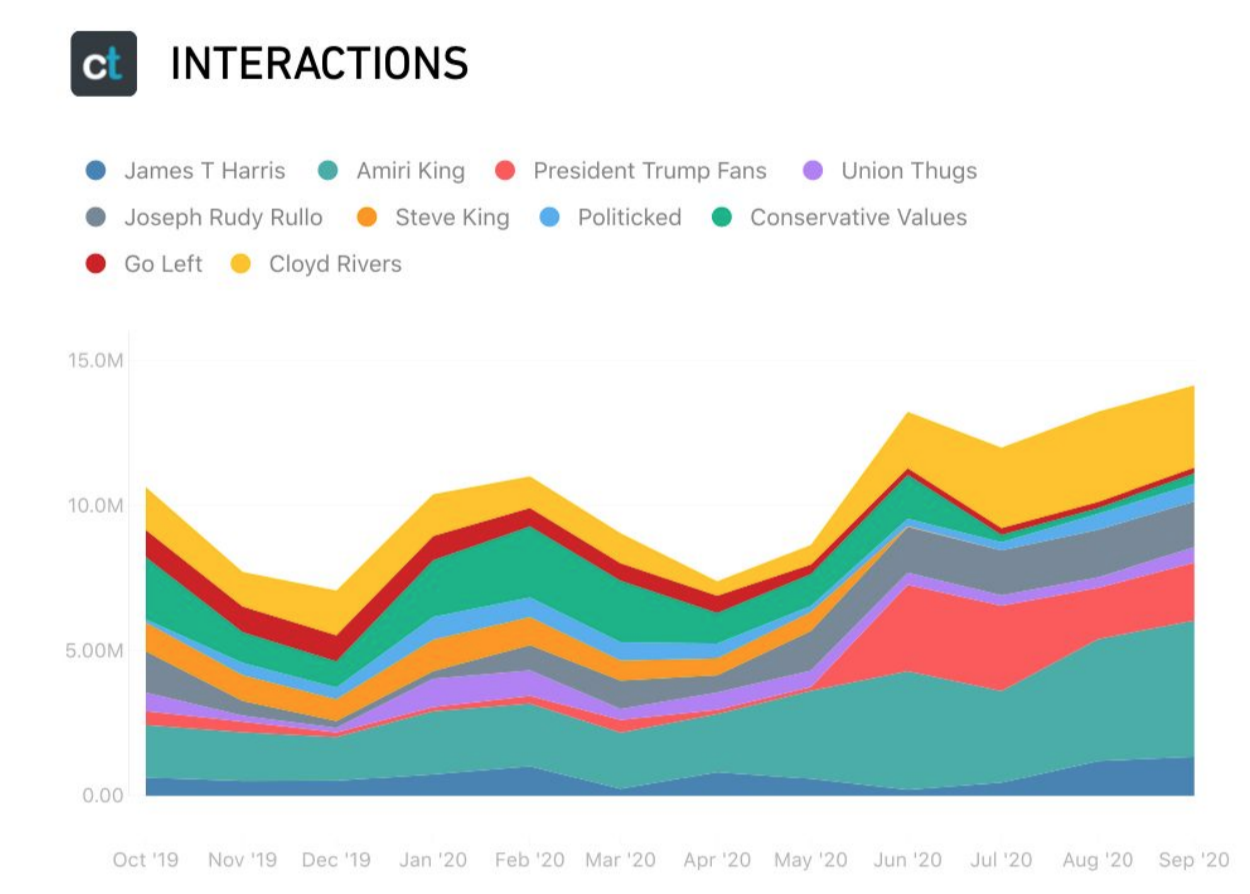

While individual posts are concerning, a bigger blind spot for Facebook’s systems is what Avaaz labels “repeat offenders.” These are pages that have posted three or more pieces of content labeled as misinformation by Facebook’s own fact-checking network in the last year. Avaaz found 119 of these pages.

Data from the Facebook-owned analytics tool CrowdTangle shows that interactions with these repeat offenders have risen in the months ahead of the election.

The top page sharing this misinformation, according to Avaaz, belonged to comedian Amiri King, who has expressed anti-mask views and tweeted phrases linked to the QAnon conspiracy theory.

On Wednesday, King announced that Facebook had suspended his account, which had 2.2 million followers. He tweeted that he was going to sue Facebook.

Also among Avaaz’s list of repeat offenders are pages called Conservative Values, Go Left, and President Trump Fans, as well as the Facebook page of Republican lawmaker Steve King, Congress’s most racially inflammatory member, who recently lost his primary.

Facebook has invested heavily in AI systems designed to automatically flag content that fact-checkers have already labeled as misinformation, even if it has since been tweaked.

It recently claimed that its AI system is so sophisticated that it is already “able to recognize near-duplicate matches” and automatically “apply warning labels” to the content.

But lightly tweaked and edited content has been spreading on Facebook for some time. Facebook faced similar criticism in the wake of the Christchurch shooting last year, when multiple versions of the horrific video posted by shooter Brenton Tarrant continued to spread on the network for up to six months after the attack.

At the time, the company promised to do better, but Avaaz’s report suggests its system is still not working the way Facebook claims it is.

“This is both pathetic and not surprising,” said Hany Farid, a professor at the University of California, Berkeley, who helped develop PhotoDNA, a technology for detecting online child abuse imagery. “The technology to detect these simple variants absolutely exists, but Facebook continually is unable or unwilling to be more aggressive with reining in misinformation.”

Avaaz flagged its findings to Facebook on Oct. 1, but says that six days later the social network had labeled only 4% of the 738 false posts and removed just 3%. The other 93% remained on the platform without a label.

“The lack of urgency and narrow scope of action by Facebook to act on the evidence provided is especially troubling,” Avaaz said in its report. “Imagine such false claims going viral just weeks ahead of the elections and Facebook’s detection system doesn’t catch them.”

Facebook told VICE News that Avaaz’s “findings don’t accurately reflect the actions we’ve taken,” adding that it has taken action against “the majority of the Groups and Pages that Avaaz brought to our attention.”

Of the top 10 repeat offenders Avaaz identified in its report, nine pages remain active.

Cover: In this Oct. 25, 2019, file photo, Facebook CEO Mark Zuckerberg speaks at the Paley Center in New York. On Wednesday, July 1, 2020, more than 500 companies kicked off an advertising boycott intended to pressure Facebook into taking a stronger stand against hate speech. Zuckerberg has agreed to meet with its organizers early the following week. (AP Photo/Mark Lennihan, File)

from VICE US https://ift.tt/34CuZK0